Personal feedback can be such an uncomfortable subject! We do all we can to avoid being in the hot seat. It's hard enough to break old habits when it comes to myself, but it's even more difficult to convince others to accept feedback. While working with HR to launch a new survey run for their managers, I emphasized the important role participants play in making sure they receive a well-rounded report. When a participant ends up with an incomplete report, they lose the opportunity to gain valuable insights into their leadership development. I compared both types of reports with my client and explained some practical steps that create a win-win situation for them and for their managers.

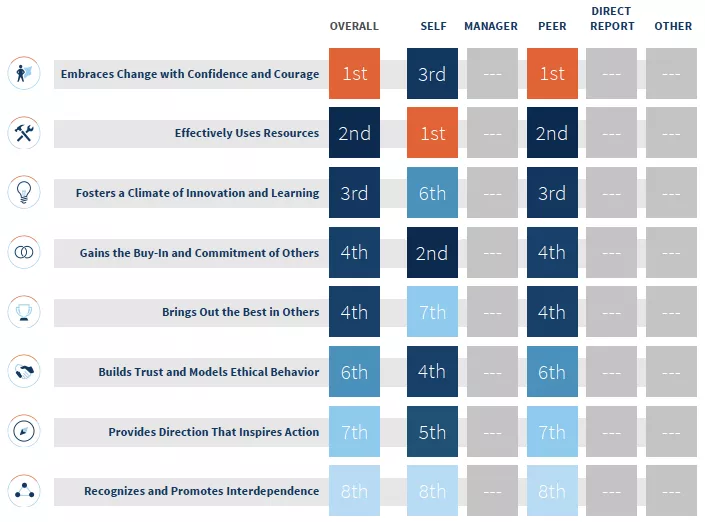

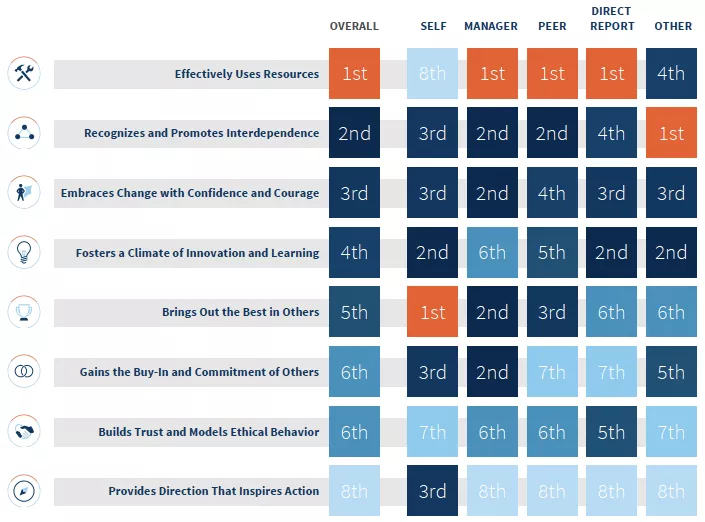

Sample heatmaps with and without all rater categories.

The following two examples show how much more robust the data is when every rater category has enough respondents.

A. Report with MISSING Rater Categories

Report A – With most rater categories missing, the participant sees only the Peers' perspective compared with their own Self-ratings.

B. Report with All Rater Categories

Report B – The participant received feedback from all categories, so they can see how their Self-ratings compare with their Manager's ratings as well as with those of every other rater group.

Help participants catch the vision.

Before the survey launches, we recommend informing participants (those receiving feedback) about the benefits of completing a 360. If their organization assures them that the 360 will not be used as a performance review but as a way to help them grow professionally, they are more likely to make it a priority. One of my clients regularly reminded his employees that their 360s would not affect their performance reviews at all. He wanted them to know that the tool was strictly for leadership development and that the data would be shared only between them and their manager.

The goal is to help participants understand why this process should matter to them. We hope they treat 360 feedback as a forward-looking career development tool: it is about where they want to go next, not about what they have already done, and it can show them a path toward their career aspirations. If participants see feedback as a threat to their compensation, they tend to pick raters who will give them more favorable feedback. The last thing we want is for participants to fight the survey process from the start, focusing solely on looking good instead of looking for ways to improve.

Who will be their raters?

We recommend that participants choose their own raters, because they will trust the results more than if their raters are selected for them. Completing the self-survey first can help participants decide whom to add as raters, since raters usually answer the same set of questions.

Below is an example of the rater categories displayed in a report, with the recommended number of raters for each:

Rater Categories

| Rater Category | Recommended Number | Description |
|---|---|---|
| Manager | 1 | The person you directly report to. |
| Peer | 5-8 | Individuals at a similar level with similar responsibilities across the organization. |
| Direct Reports | All | All individuals who report directly to you. |
| Other | 5-8 | Clients, former colleagues, mentors/collaborators, or anyone else you have worked closely with. |

Each category groups people the participant interacts with in similar ways, which helps reveal differences in how the participant relates to each group. Some participants may not have any direct reports; we recommend they either add former direct reports or leave that category blank.

The recommended number of raters for each category helps ensure that a participant receives enough feedback. At LearningBridge, we take whatever degree of anonymity was promised very seriously. In most cases, therefore, a category with fewer than 3 completed responses is combined with another rater category to preserve anonymity. We want to avoid these combined groupings in a report wherever possible, so that participants receive feedback from each category and a more robust report overall.

How should they communicate with their raters?

As a courtesy, and to increase the likelihood of a response, we recommend that participants give their raters a heads-up about the survey. This helps avoid questions about whether the request for feedback is legitimate. (It's also a great idea for participants to send a thank-you note to their raters after the survey is complete.) Here's an example of an initial heads-up email:

Hello (Respondent Name),

I'm seeking to understand more about my personal strengths and development areas. To do this, I'm asking a number of people who work with me to provide feedback via a short survey (approx. 20 mins) called the Leadership Inventory.

I really value your point of view, so I'd very much appreciate your help with this part of my leadership development journey. You will receive an email from the survey host, LearningBridge, with a link to complete the survey. Please let me know if you have any questions.

Thanks for your support.

/Name

As you can see, a few simple steps can go a long way toward a final report with robust data. Ultimately, we hope that each participant takes away valuable insights from their data and that others follow their lead, seeing feedback as an opportunity to gauge whether they are heading in the right direction, both professionally and personally.